Cross-view Transformation based Sparse Reconstruction for Person Re-identification

Abstract

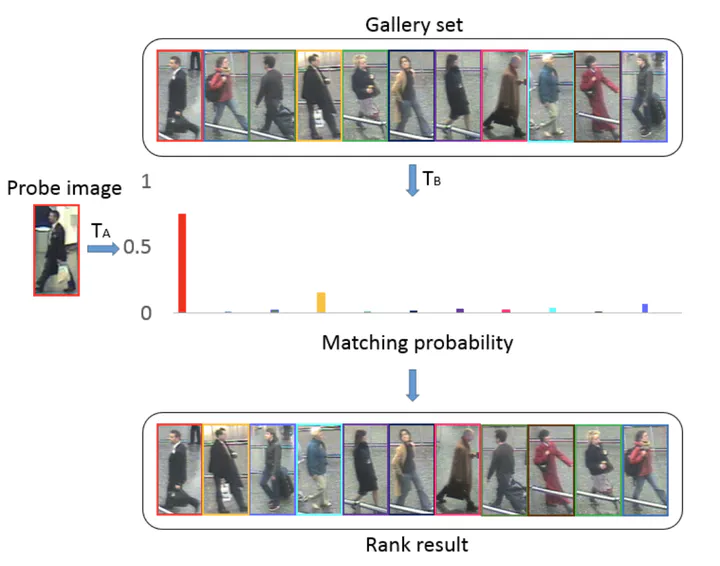

Built on the minimum reconstruction error criterion and the intrinsic sparsity of natural data, sparse representation (SR) has shown promising performance on various image recognition tasks. However, in person re-identification (re-id), the state of the art is still dominated by other methods such as metric learning and CNNs. This is because samples from one camera view may not be representative enough to reconstruct samples from another view. As a result, the reconstruction error can be excessive, and different pedestrians become indistinguishable from the coefficients produced by sparse representation. In this paper, we propose an asymmetric sparse representation to address this problem. Samples from different camera views (gallery and probe samples) are mapped to a common latent space, and the sparse coefficients are generated in this space. In this way, the representation power is enhanced and the sparse coefficients become more reliable. The similarity between samples is determined by the enhanced sparse coefficients, which allows more discriminative matching across camera views. Extensive experiments on the CAVIAR4REID, iLIDS-VID and PRID 2011 datasets demonstrate the merits of our approach.
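The idea of coding a probe sample over gallery samples after both have been mapped to a shared space can be illustrated with a toy sketch. This is not the paper's formulation: the view-specific maps `P_probe` and `P_gallery` are hand-picked here rather than learned, the solver is plain ISTA for an L1-regularized least-squares problem, and all function names and data are hypothetical.

```python
# Toy sketch only: hand-picked view maps and a generic ISTA solver,
# not the learned transformations or objective from the paper.

def project(P, x):
    """Apply a linear map P (list of rows) to feature vector x."""
    return [sum(p * v for p, v in zip(row, x)) for row in P]

def soft_threshold(v, t):
    """Shrink v toward zero by t (proximal operator of the L1 norm)."""
    return max(v - t, 0.0) - max(-v - t, 0.0)

def sparse_code(atoms, y, lam=0.01, steps=500):
    """ISTA for min_a 0.5*||y - sum_j a[j]*atoms[j]||^2 + lam*||a||_1."""
    # The squared Frobenius norm upper-bounds the Lipschitz constant,
    # so its inverse is a safe (conservative) step size.
    step = 1.0 / sum(v * v for atom in atoms for v in atom)
    a = [0.0] * len(atoms)
    for _ in range(steps):
        recon = [sum(a[j] * atoms[j][i] for j in range(len(atoms)))
                 for i in range(len(y))]
        resid = [r - t for r, t in zip(recon, y)]
        grad = [sum(atom[i] * resid[i] for i in range(len(y)))
                for atom in atoms]
        a = [soft_threshold(a[j] - step * grad[j], step * lam)
             for j in range(len(atoms))]
    return a

def match(probe, gallery, P_probe, P_gallery, lam=0.01):
    """Code the projected probe over projected gallery samples and
    return the index of the gallery sample with the largest coefficient."""
    atoms = [project(P_gallery, g) for g in gallery]
    y = project(P_probe, probe)
    coeffs = sparse_code(atoms, y, lam)
    best = max(range(len(coeffs)), key=lambda j: abs(coeffs[j]))
    return best, coeffs

# Assumed toy data: three gallery identities, and a probe camera that
# sees the same features with channels cyclically shifted.
identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
gallery = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
probe = [0.1, 0.0, 0.9]              # identity 1 as seen by the probe camera
P_gallery = identity
P_probe = [[0, 1, 0], [0, 0, 1], [1, 0, 0]]  # undoes the channel shift

best, coeffs = match(probe, gallery, P_probe, P_gallery)
naive_best, _ = match(probe, gallery, identity, P_gallery)
```

In this toy setup, coding the probe directly in its own view (`naive_best`) selects the wrong gallery sample, while coding in the shared space (`best`) selects the intended one. This mirrors, in a very simplified way, the motivation given in the abstract.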

Publication

International Conference on Pattern Recognition